Scraping canteens' daily lunch offers using R

The lunch offers at ETH Zurich are great and there is plenty of canteens to go to, on both locations of the campus in the city centre of Zurich as well as on the Hönggerberg. This variety of lunch options sometimes make it hard to decide where to go. Thus, I often looked at each of my favorite canteen’s menu table, to see, which menu appeals to me the most. Especially, if one wants to get a quick overview over the daily offers with some restrictions like vegetarian menus, this approach is cumbersome.

Thus, I wrote a small scraping tool for R, that can tell me every day, which vegetarian menus are offered at every canteen at ETH Zentrum.

Webscraping basically means to retrieve content from a specific website automatically. There is the powerful tidyverse package rvest for this purpose and there are plenty tutorials on its usage (see for example this short intro by Hadley or its documentation). To display the final table I used the packages grid and gridExtra.

Moreover, if one scrapes a web page, it is a form of web etiquette to ask first (automatically 😉), whether it is allowed to extract information from the page. For this purpose, Dmytro Perepolkin developed the polite package for R, which we will use to meet web etiquette standards (documentation).

library(rvest)

library(gridExtra)

library(grid)

#remotes::install_github("dmi3kno/polite") # to install

library(polite)# the polite package also requires some functions (see https://www.ddrive.no/post/be-nice-on-the-web/)

`%||%` <- function(lhs, rhs) {

if (!is.null(lhs) && length(lhs) > 0) lhs else rhs

}

polite_fetch_rtxt <- memoise::memoise(

function(..., user_agent, delay, verbose){

rt <- robotstxt::robotstxt(...)

delay_df <- rt$crawl_delay

crawldelays <- as.numeric(

delay_df[with(delay_df,useragent==user_agent),"value"]) %||%

as.numeric(delay_df[with(delay_df, useragent=="*"), "value"]) %||% 0

rt$delay_rate <- max(crawldelays, delay, 1)

if(verbose){

message("Bowing to: ", rt$domain)

message("There's ",nrow(delay_df),

" crawl delay rule(s) defined for this host.")

message("Your rate will be set to 1 request every ",

rt$delay_rate," second(s).")}

rt

})

check_rtxt <-function(url, delay, user_agent, force, verbose){

url_parsed <- httr::parse_url(url)

host_url <- paste0(url_parsed$scheme, "://", url_parsed$hostname)

rt <- polite_fetch_rtxt(host_url, force=force, user_agent=user_agent,

delay=delay, verbose=verbose)

is_scrapable <- rt$check(paths=url_parsed$path, bot=user_agent)

if(is_scrapable)

Sys.sleep(rt$delay_rate)

else

warning("robots.txt says this path is NOT scrapable for your user agent!")

is_scrapable

}

polite_read_html <- memoise::memoise(

function(url, ..., delay = 5,

user_agent=paste0("polite ", getOption("HTTPUserAgent"), "bot"),

force = FALSE, verbose=FALSE){

if(!check_rtxt(url, delay, user_agent, force, verbose)){

return(NULL)

}

old_ua <- getOption("HTTPUserAgent")

options("HTTPUserAgent"= user_agent)

if(verbose) message("Scraping: ", url)

res <- httr::GET(url, ...)

options("HTTPUserAgent"= old_ua)

httr::content(res)

})To get the content from the pages, one first needs to understand, how the URL-address to the menu table of each canteen works. For example, the URL for the Polysnack restaurant, where they serve delicious pizza 🍕, looks like the following: https://ethz.ch/de/campus/erleben/gastronomie-und-einkaufen/gastronomie/menueplaene/offerDay.html?language=de&id=13&date=2020-02-21, where id=13 calls the menu for the polysnack canteen, of the given date (Friday 21st of February).

The URLs for the other canteens all are built in the same way, by combining a common path, the id for the canteen and the date with ampersands. After finding out, which ids stood for which canteens, we can easily combine all the paths.

base <- "https://www.ethz.ch/de/campus/erleben/gastronomie-und-einkaufen/gastronomie/menueplaene/offerDay.html?language=de&id="

# ids

# 4 = Clausiusbar

# 6 = Dozentenfoyer

# 12 = Polyterrasse

# 13 = Polysnack

# 14 = Tannenbar

# 28 = Food&Lab

# 11 = GESSbar

today <- format(Sys.time(), "%Y-%m-%d")

# to get the URL

paste0(base, 4, "&date=", today)## [1] "https://www.ethz.ch/de/campus/erleben/gastronomie-und-einkaufen/gastronomie/menueplaene/offerDay.html?language=de&id=4&date=2020-02-21"MENSA <- data.frame(Place=NULL, Menu=NULL)

Menu <- NAI also initialized an empty dataframe MENSA with the canteen and menu columns. When putting the ids in a vector, over which a for-loop iterates, we can extract the information from each canteen’s page. Using the polite_read_html function, we ask for permission to scrape the data and extract the content of the whole page. Then, using the html_table function (one could also use CSS-selectors) I extracted the specific text containing the menu. I also omitted unnecessary text such as “Details einblenden” and chose only the option, that is most often the vegetarian one 🥗 (sometimes called “GARDEN” or “Favorite”).

for(i in c(4,11,12,13,14,28)){ # all the canteen id's

path <- paste0(base, i, "&date=", today )

webpage <- polite_read_html(path)

menus <- webpage %>% html_table(fill=TRUE, header=TRUE)

menus <- data.frame(menus)

menus$Details <- gsub("Details einblenden","", menus$Details)

menus$Details <- gsub("Add on: XXL (+1.00/1.50)","", menus$Details)

if(i ==4) { menus <- menus[menus$Menülinie=="GARDEN","Details"];

newline <- data.frame(Place="Clausiusbar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==12) { menus <- menus[menus$Menülinie=="GARDEN","Details"];

newline <- data.frame(Place="Polyterrasse", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==13) { menus <- menus[menus$Menülinie=="Hausgemachte Pizza","Details"];

newline <- data.frame(Place="Polysnack", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==14) { menus <- menus[3,"Details"];

newline <- data.frame(Place="Tannenbar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i==28) {menus <- menus[3, "Details"];

newline <- data.frame(Place="Food&Lab", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i==11) {menus <- menus[menus$Menülinie=="Favorite","Details"];

newline <- data.frame(Place="GESS-bar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

}#end of for

#and plot the results in a table

wrap_strings <- function(vector_of_strings,width){sapply(vector_of_strings, FUN = function(x){paste(strwrap(x,width = width), collapse="\n")})}

MENSA$Menu <- wrap_strings(MENSA$Menu, 60)

dev.off() # in case there is another plot open at the moment

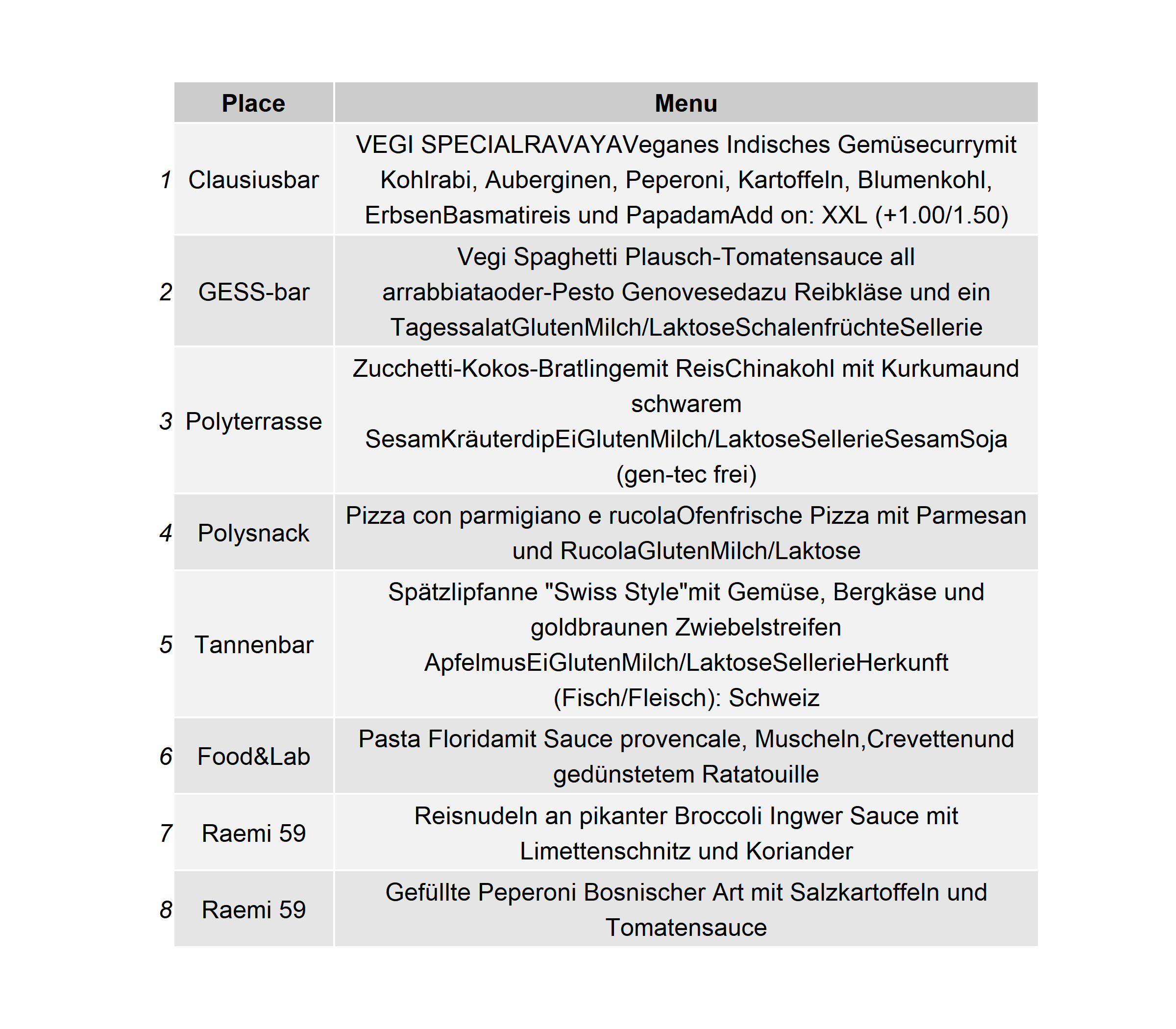

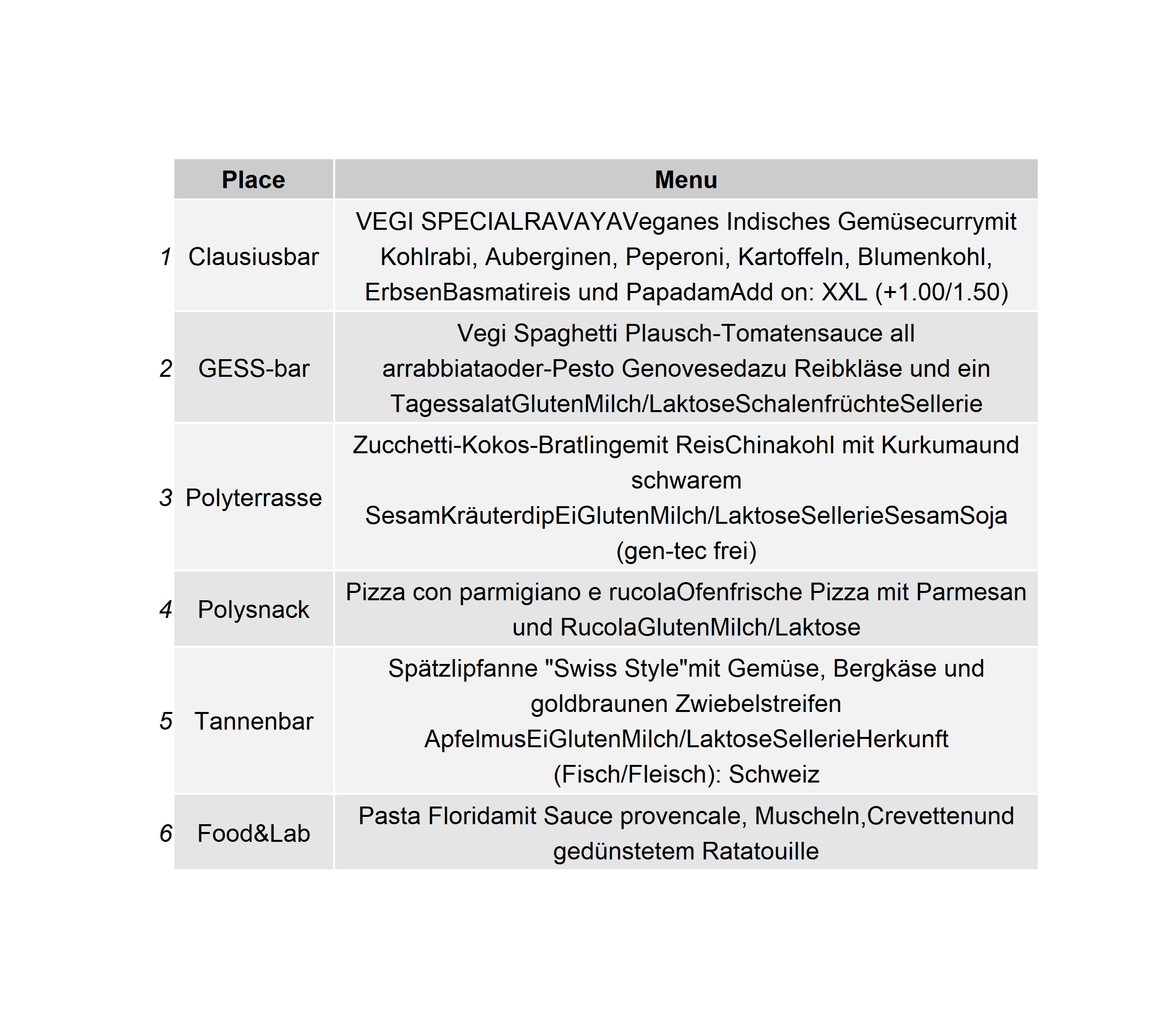

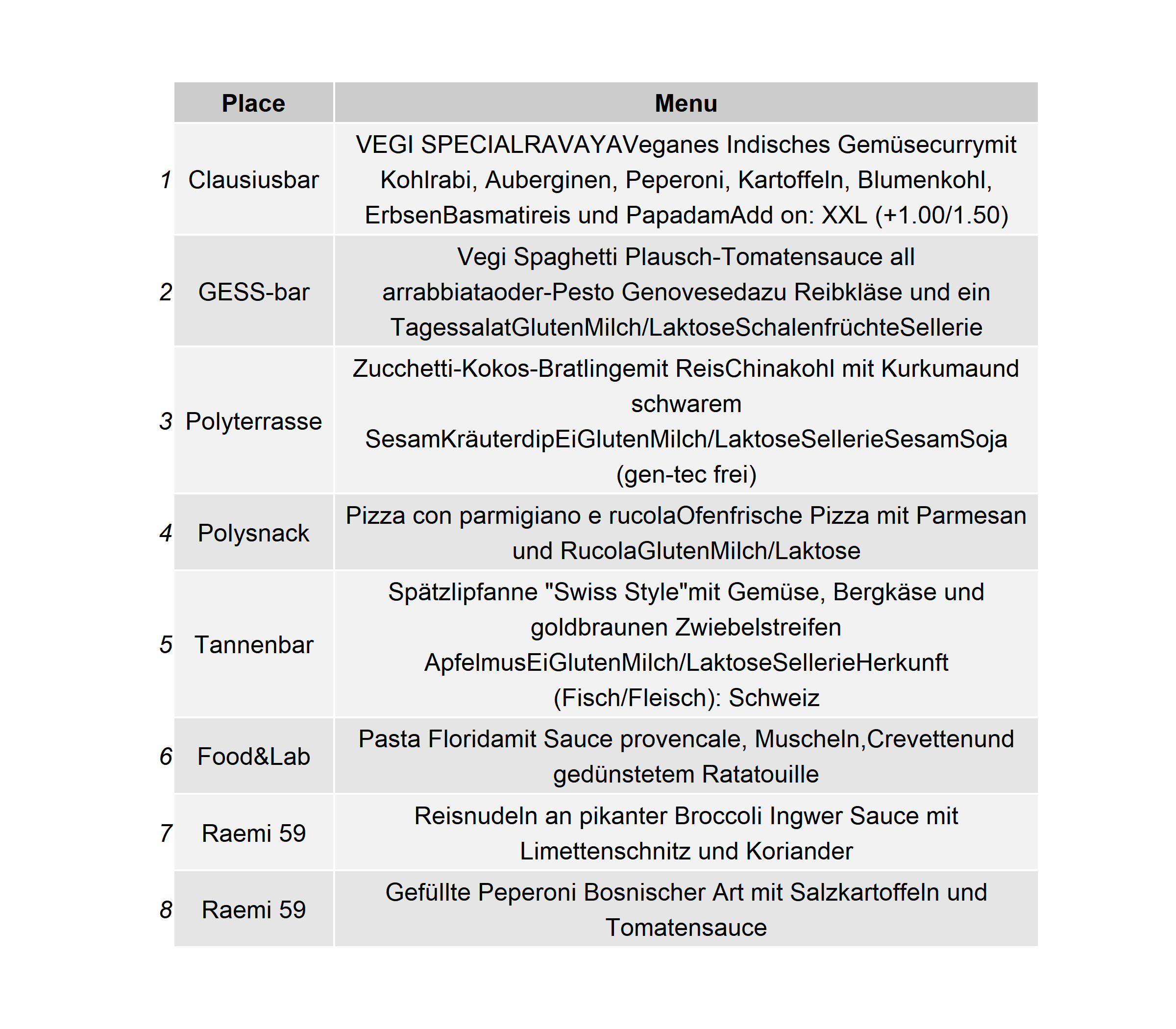

grid.table(MENSA) Admittedly, the selection of vegetarian options does not work perfect for every canteen, as the menu lines are sometimes not clear, and the pizza 🍕 at the Polysnack restaurant can be vegetarian or not.

Admittedly, the selection of vegetarian options does not work perfect for every canteen, as the menu lines are sometimes not clear, and the pizza 🍕 at the Polysnack restaurant can be vegetarian or not.

Finally, I also added the offers of the vegetarian mensa of the Uni Zürich. Its URL has a little different structure, as well as the content, for which I now used the CSS selector “.text-basics” to extract the menus.

#Add Raemi 59

raemi <- paste0("https://www.mensa.uzh.ch/de/menueplaene/raemi59/",tolower(weekdays(as.Date(today))),".html")

webpage <- read_html(raemi)

menus <- webpage %>% html_nodes(".text-basics") %>% html_text()

menus <- gsub("\\t","",menus[2])

menus <- strsplit(menus, "\n")[[1]][c(5,18)]

newline <- data.frame(Place=rep("Raemi 59",2), Menu=menus)

MENSA <- rbind(MENSA, newline)

#

wrap_strings <- function(vector_of_strings,width){sapply(vector_of_strings, FUN = function(x){paste(strwrap(x,width = width), collapse="\n")})}

MENSA$Menu <- wrap_strings(MENSA$Menu, 60)

dev.off() # in case there is another plot open at the moment

grid.table(MENSA)

Also, the result is not perfect in terms of unnecessary words and strange spacing of some words. However, it works for me to yield a quick overview of all the daily offers. Here is the complete code, in case you just want to copy it directly and start finding your daily lunch 🍝. It should be easily adaptable to the canteens at ETH Hönggerberg:

library(rvest)

library(gridExtra)

library(grid)

#remotes::install_github("dmi3kno/polite") # to install

library(polite)

# you could ignore the etiquette; which makes it faster. Then you have to replace "polite_read_html" by "read_html"

# the polite package also requires some functions (see https://www.ddrive.no/post/be-nice-on-the-web/)

`%||%` <- function(lhs, rhs) {

if (!is.null(lhs) && length(lhs) > 0) lhs else rhs

}

polite_fetch_rtxt <- memoise::memoise(

function(..., user_agent, delay, verbose){

rt <- robotstxt::robotstxt(...)

delay_df <- rt$crawl_delay

crawldelays <- as.numeric(

delay_df[with(delay_df,useragent==user_agent),"value"]) %||%

as.numeric(delay_df[with(delay_df, useragent=="*"), "value"]) %||% 0

rt$delay_rate <- max(crawldelays, delay, 1)

if(verbose){

message("Bowing to: ", rt$domain)

message("There's ",nrow(delay_df),

" crawl delay rule(s) defined for this host.")

message("Your rate will be set to 1 request every ",

rt$delay_rate," second(s).")}

rt

})

check_rtxt <-function(url, delay, user_agent, force, verbose){

url_parsed <- httr::parse_url(url)

host_url <- paste0(url_parsed$scheme, "://", url_parsed$hostname)

rt <- polite_fetch_rtxt(host_url, force=force, user_agent=user_agent,

delay=delay, verbose=verbose)

is_scrapable <- rt$check(paths=url_parsed$path, bot=user_agent)

if(is_scrapable)

Sys.sleep(rt$delay_rate)

else

warning("robots.txt says this path is NOT scrapable for your user agent!")

is_scrapable

}

polite_read_html <- memoise::memoise(

function(url, ..., delay = 5,

user_agent=paste0("polite ", getOption("HTTPUserAgent"), "bot"),

force = FALSE, verbose=FALSE){

if(!check_rtxt(url, delay, user_agent, force, verbose)){

return(NULL)

}

old_ua <- getOption("HTTPUserAgent")

options("HTTPUserAgent"= user_agent)

if(verbose) message("Scraping: ", url)

res <- httr::GET(url, ...)

options("HTTPUserAgent"= old_ua)

httr::content(res)

})

###########################

base <- "https://www.ethz.ch/de/campus/erleben/gastronomie-und-einkaufen/gastronomie/menueplaene/offerDay.html?language=de&id="

# ids

# 4 = Clausiusbar

# 6 = Dozentenfoyer

# 12 = Polyterrasse

# 13 = Polysnack

# 14 = Tannenbar

# 28 = Food&Lab

# 11 = GESSbar

today <- format(Sys.time(), "%Y-%m-%d")

# to get the URL

paste0(base, 4, "&date=", today)

MENSA <- data.frame(Place=NULL, Menu=NULL)

Menu <- NA

for(i in c(4,11,12,13,14,28)){ # all the canteen id's

path <- paste0(base, i, "&date=", today )

webpage <- polite_read_html(path)

menus <- webpage %>% html_table(fill=TRUE, header=TRUE)

menus <- data.frame(menus)

menus$Details <- gsub("Details einblenden","", menus$Details)

menus$Details <- gsub("Add on: XXL (+1.00/1.50)","", menus$Details)

if(i ==4) { menus <- menus[menus$Menülinie=="GARDEN","Details"];

newline <- data.frame(Place="Clausiusbar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==12) { menus <- menus[menus$Menülinie=="GARDEN","Details"];

newline <- data.frame(Place="Polyterrasse", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==13) { menus <- menus[menus$Menülinie=="Hausgemachte Pizza","Details"];

newline <- data.frame(Place="Polysnack", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i ==14) { menus <- menus[3,"Details"];

newline <- data.frame(Place="Tannenbar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i==28) {menus <- menus[3, "Details"];

newline <- data.frame(Place="Food&Lab", Menu=menus);

MENSA <- rbind(MENSA, newline)}

if(i==11) {menus <- menus[menus$Menülinie=="Favorite","Details"];

newline <- data.frame(Place="GESS-bar", Menu=menus);

MENSA <- rbind(MENSA, newline)}

}#end of for

#Add Raemi 59

raemi <- paste0("https://www.mensa.uzh.ch/de/menueplaene/raemi59/",tolower(weekdays(as.Date(today))),".html")

webpage <- read_html(raemi)

menus <- webpage %>% html_nodes(".text-basics") %>% html_text()

menus <- gsub("\\t","",menus[2])

menus <- strsplit(menus, "\n")[[1]][c(5,18)]

newline <- data.frame(Place=rep("Raemi 59",2), Menu=menus)

MENSA <- rbind(MENSA, newline)

#

#and plot the results in a table

wrap_strings <- function(vector_of_strings,width){sapply(vector_of_strings, FUN = function(x){paste(strwrap(x,width = width), collapse="\n")})}

MENSA$Menu <- wrap_strings(MENSA$Menu, 60)

dev.off() # in case there is another plot open at the moment

grid.table(MENSA)